How our sound perception affects music composition and mixing

Hearing is a music producer’s most important tool, but our ears get in the way when you’re trying to stay objective about your mix. This is because the human auditory system doesn’t just transmit sonic vibrations straight from the air to your consciousness; the ear and brain colour the raw sensory input through their own nonlinear response.

To make the best possible creative decisions, we must be aware of these psychoacoustic characteristics, especially when mixing and mastering music.

In this blog post I want to point out some fundamental psychoacoustic principles that you can use to your advantage when producing and mixing music, so that your auditory perception doesn’t fool you.

Limits of hearing

Human hearing ranges from about 20 Hz to 20 kHz. Infants can hear sounds slightly higher than 20 kHz, but as people age, they lose some of their ability to hear higher frequencies. For most adults, the upper limit drops to around 15–17 kHz. What does that mean for mixing and mastering? Since we can’t hear frequencies below 20 Hz or above 20 kHz, it makes sense to filter out content outside that range. This is especially advisable at the low end: even though we don’t hear those frequencies, they are still present in the signal and use up headroom in the mix. Removing them helps clear up the mix.
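To make the low cut concrete, here is a minimal sketch in Python of a first-order high-pass filter. It is only an illustration: the function names, the 20 Hz cutoff and the 5 Hz test rumble are my own assumptions, and the high-pass in your EQ plugin will usually be steeper than the 6 dB per octave slope used here.

```python
import math

def highpass(samples, cutoff_hz, sample_rate):
    """First-order (6 dB/octave) RC-style high-pass filter; returns a new list."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out

def peak(samples):
    """Largest absolute sample value."""
    return max(abs(s) for s in samples)

sample_rate = 44100
n = sample_rate  # one second of audio

# Hypothetical test signals: inaudible 5 Hz rumble and an audible 1 kHz tone.
rumble = [0.5 * math.sin(2 * math.pi * 5 * i / sample_rate) for i in range(n)]
tone = [0.5 * math.sin(2 * math.pi * 1000 * i / sample_rate) for i in range(n)]

filtered_rumble = highpass(rumble, cutoff_hz=20, sample_rate=sample_rate)
filtered_tone = highpass(tone, cutoff_hz=20, sample_rate=sample_rate)

print(f"rumble peak before: {peak(rumble):.3f}, after: {peak(filtered_rumble):.3f}")
print(f"tone peak before:   {peak(tone):.3f}, after: {peak(filtered_tone):.3f}")
```

The rumble shrinks considerably while the 1 kHz tone passes almost unchanged, which is exactly the headroom you win back in a mix.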

Equal-loudness contours: How our hearing colours sound

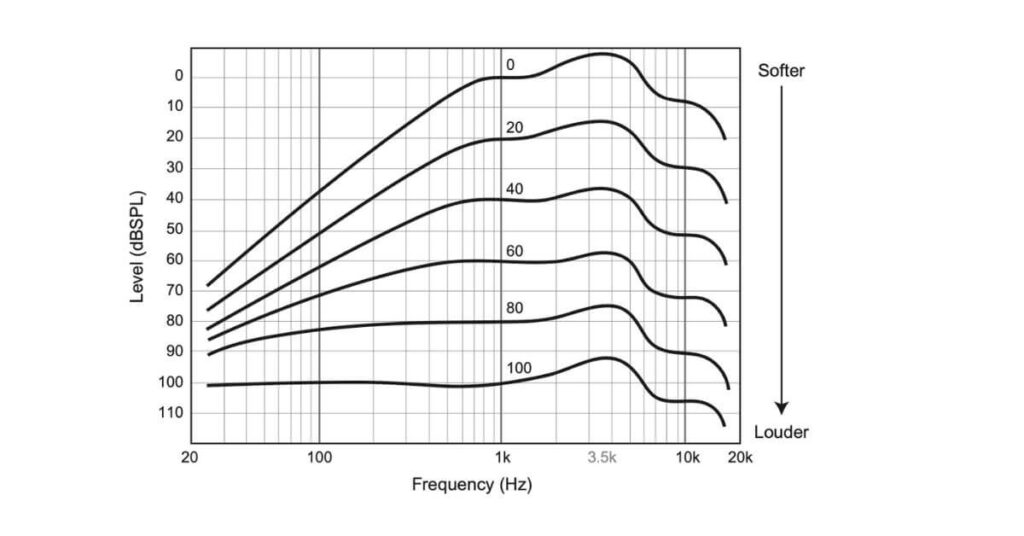

Our perception of sound is “coloured” by how our ears and brain perceive different frequencies at various loudness levels. This effect is captured by what are called equal-loudness contours, a phenomenon first mapped by Fletcher and Munson in their famous experiment, later refined by Robinson and Dadson. Today, we use the ISO 226 standard to represent these findings.

Equal-loudness contours, often also referred to as the Fletcher-Munson curves, show that our ears don’t hear all frequencies equally (the curves are shown upside down in the figure above). Instead, our sensitivity to different parts of the frequency spectrum varies depending on the volume. These curves, also known as phon curves, are referenced to 1 kHz: a sound on the 20-phon curve is as loud as a 1 kHz tone at 20 dB SPL (sound pressure level). For instance, on the 20-phon curve, a much lower frequency like 100 Hz would need to be played at roughly 50 dB SPL to sound as loud to us as 1 kHz at 20 dB SPL. This illustrates that low frequencies need to be much louder than mid-range frequencies for us to perceive them at the same level. 1
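If you want to play with these numbers, a rough stand-in is the A-weighting curve used by sound level meters, which was loosely derived from the 40-phon contour. The sketch below is not the ISO 226 data itself, just the standard A-weighting formula, and the function name is my own. It shows how much quieter a tone appears relative to 1 kHz at moderate listening levels.

```python
import math

def a_weighting_db(freq_hz):
    """A-weighting gain in dB relative to 1 kHz (approximation of ear sensitivity)."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.0 dB offset normalises the curve to 0 dB at 1 kHz.
    return 20.0 * math.log10(numerator / denominator) + 2.0

for freq in (50, 100, 1000, 3500, 10000):
    print(f"{freq:>6} Hz: {a_weighting_db(freq):+.1f} dB")
```

At 100 Hz the curve sits at roughly -19 dB, while around 3.5 kHz it is slightly above 0 dB, which matches the idea that bass needs far more level than the upper mids to sound equally loud.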

Interestingly, our ears are most sensitive to frequencies around 3.5 kHz, close to the natural resonant frequency of the ear canal. This is also the range of a baby’s cry and of warning signals such as ambulance and police sirens or smoke detectors, which may explain why they grab our attention so easily! But what does this mean for mixing or mastering music?

Mixing at different levels

The shifting perception of frequencies as volume changes raises important questions for mixing and producing music. If our perception of a mix’s balance depends on the playback level, how can we create a balanced mix that holds up across different volumes? The answer lies in constantly checking your mix at various levels and ensuring that it remains balanced regardless of how loud it is played.

For instance, at lower levels, we naturally hear less bass and treble. If your kick drum is only prominent in the low frequencies, it might completely disappear at low volumes. To prevent this, you can adjust the kick to also have a presence in the high-mid range, ensuring it remains audible even at lower playback volumes.

Many professionals believe that a solid mid-range is the foundation of a well-balanced mix, as it remains relatively stable across different levels. If the highs and lows are carefully crafted to extend from the mids, the overall mix will be more consistent, whether it’s played loudly or softly.

Another useful guideline is that if a mix sounds good at low volumes, it’s likely to hold up well when played loudly. The reverse is not always true—what sounds great at high volumes may fall apart when played quietly.

Finally, it’s important to consider the context in which your mix will be heard. For example, electronic dance music is typically played at higher volumes, so you can use that louder level as a reference point during mixing. By knowing the likely playback environment, you can tailor your mix to sound best under those conditions.

Room response

There’s another reason why louder music is perceived as better, at least if you are listening through monitor speakers. At lower volumes, we primarily hear the direct sound from the monitors, with very little of the room. The reflections off walls and surfaces are still there, but they are so much quieter than the direct sound that they are barely audible. As the volume increases, those reflections become loud enough to hear, creating what’s known as the room response. This results in a more immersive experience, as the sound feels more enveloping and engaging.

To illustrate this, try playing a track quietly through your monitor speakers; you’ll likely perceive the sound as coming from a defined space between the speakers. Increase the volume, and you’ll notice the sound image expanding, transforming from a flat experience into a more three-dimensional one. This phenomenon highlights why louder music is often perceived as better, especially when using monitor speakers.

Referencing

Understanding how our hearing perception works is crucial in the mixing process. As we’ve discussed, our ears are not equally sensitive across the frequency spectrum, meaning that our perception of a mix can be easily influenced by volume levels and the specific frequencies being played. This is where referencing comes into play as an effective technique to counteract these perception biases and help us make more informed mixing decisions.

Why Referencing Matters: Our ears can be misled by various factors, such as changes in volume or our familiarity with our own mix. A track that sounds balanced and polished at one moment may feel different when played back at a different volume or in another environment. By using a reference track – a song that embodies the sound quality and balance you aim to achieve – you can gain a stable benchmark. This benchmark helps you maintain perspective on your mix’s tonal balance, dynamics, and overall character, ensuring it holds up against the expectations shaped by our hearing perception.
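Because loudness alone changes how we judge tonal balance, it helps to level-match the reference before comparing. Here is a minimal sketch, assuming both tracks are already loaded as lists of float samples. The function names are hypothetical, and plain RMS is only a crude stand-in for perceived loudness (a loudness meter would be more accurate).

```python
import math

def rms_db(samples):
    """RMS level in dB relative to full scale (1.0)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square) if mean_square > 0 else float("-inf")

def reference_trim_db(mix, reference):
    """Gain in dB to apply to the reference so its RMS matches the mix."""
    return rms_db(mix) - rms_db(reference)

def apply_gain(samples, gain_db):
    """Scale samples by a gain given in dB."""
    factor = 10.0 ** (gain_db / 20.0)
    return [s * factor for s in samples]

# Hypothetical signals: a quiet unmastered mix and a reference twice as loud.
sample_rate = 44100
mix = [0.25 * math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(sample_rate)]
reference = [2.0 * s for s in mix]

trim = reference_trim_db(mix, reference)
matched_reference = apply_gain(reference, trim)
print(f"turn the reference down by {-trim:.1f} dB before comparing")
```

Turning the reference down rather than the mix up keeps the louder, mastered track from winning the comparison just because it is louder.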

Spatial location – Add room to your mix

Humans rely on binaural hearing, using both ears to determine the direction of sound. A sound coming from one side reaches the nearer ear slightly earlier and slightly louder than the other, because it has to travel (diffract) around the head to reach the far ear. High-frequency sounds are easier to localise because our brains can detect clear differences in amplitude and arrival time, allowing us to pinpoint their source effectively.

Conversely, low-frequency sounds pose a challenge for localisation due to their longer wavelengths, which produce less pronounced differences in amplitude and time. In this case, our brains rely on phase differences between sound waves arriving at each ear to determine direction.
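To put rough numbers on this, the sketch below compares wavelengths with the size of a head and estimates the arrival-time difference between the ears using Woodworth’s spherical-head approximation. The head radius of 8.75 cm, the speed of sound of 343 m/s and the function names are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature air
HEAD_RADIUS = 0.0875    # m, assumed average head radius

def wavelength_m(freq_hz):
    """Wavelength of a sound wave in air."""
    return SPEED_OF_SOUND / freq_hz

def itd_seconds(azimuth_deg):
    """Interaural time difference (Woodworth approximation), valid for 0 to 90 degrees."""
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))

for freq in (100, 1000, 10000):
    print(f"{freq:>6} Hz -> wavelength {wavelength_m(freq):.3f} m")

for angle in (0, 30, 60, 90):
    print(f"{angle:>3} degrees -> time difference {itd_seconds(angle) * 1000:.2f} ms")
```

A 100 Hz wave is several metres long, so the head barely casts a shadow, while a 10 kHz wave is only a few centimetres long and is blocked easily. The time difference stays well under a millisecond even for a sound coming directly from the side.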

The Haas effect

The Haas effect, or precedence effect, named after Helmut Haas, who described it around 1949, further illustrates how we perceive sound location. For instance, if two trumpet players sound the same note, one closer and louder (Player A) and the other farther away (Player B), our perception will favour Player A. This is because the first sound we hear determines where we locate the source, even if the volumes are equalised later. Research shows that when the time delay between two sound sources is between 5 and 30 milliseconds, the delayed sound must be about 10 decibels louder for listeners to perceive them as equal. 2

Interestingly, for very short delays of about 2 – 5 milliseconds, the location is primarily judged based on the initial sound. As the delay increases beyond 5 milliseconds, the perceived level and spaciousness of the sound grow while still retaining the apparent location of the initial sound. Remarkably, a reflected sound can even be louder than the direct sound – by as much as 10 dB – without interfering with localisation.

Taken together, these principles offer valuable insights for music production. To simulate a Haas delay, we can use a simple delay and an EQ: duplicate the signal, delay the copy by somewhere between 1 and 40 milliseconds, and the result will have a sense of depth. By effectively managing high and low frequencies and considering the Haas effect, producers can create a strong sense of space and depth in their tracks, enhancing the stereo perception.
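A minimal sketch of such a Haas delay in Python could look like this. It assumes a mono signal as a list of float samples and leaves out the EQ step; the function name and the default of 15 ms are my own choices, not a fixed recipe.

```python
def haas_widen(mono, sample_rate, delay_ms=15.0, delayed_gain=1.0):
    """Return (left, right) where the right channel is a delayed copy of the left."""
    delay_samples = int(round(sample_rate * delay_ms / 1000.0))
    # Pad both channels so they end up with the same length.
    left = list(mono) + [0.0] * delay_samples
    right = [0.0] * delay_samples + [s * delayed_gain for s in mono]
    return left, right

# Usage: a short click, delayed by 3 ms at a 1 kHz sample rate (3 samples).
left, right = haas_widen([1.0, 0.5], sample_rate=1000, delay_ms=3.0)
print(left)
print(right)
```

Because the left channel arrives first, the sound is still located on the left while the delayed copy adds width. Check the result in mono as well, since summing a signal with a short delayed copy of itself causes comb filtering.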

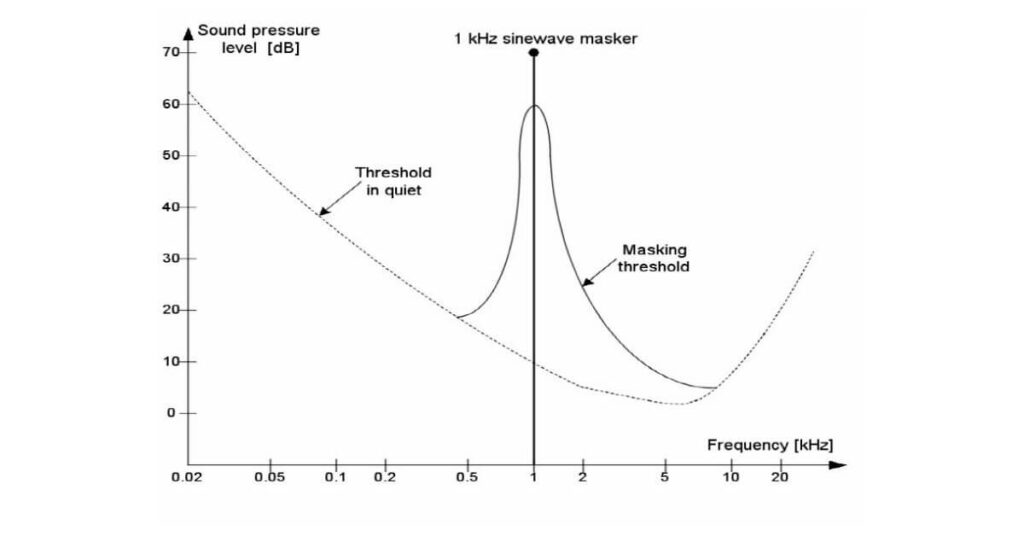

Frequency masking: Why louder sounds are more noticeable

Frequency masking is a phenomenon that occurs when one sound covers or “masks” another sound at or near the same frequency, making it difficult to hear the quieter sound. Essentially, louder sounds tend to dominate and obscure quieter ones, particularly when their frequencies overlap. This means that when you increase the volume of a particular instrument in a mix, it becomes easier to hear partly because it overpowers other sounds within the same frequency range. 3

In mixing, understanding masking is essential for achieving clarity and balance. When multiple instruments share similar frequencies, the louder instrument will often dominate, making it easier to perceive. For example, if a bass and a kick drum occupy the same low-frequency space, boosting the bass might make it more prominent, but it could also mask the kick. That is why sidechain compression is so commonly used: it briefly turns the bass down whenever the kick hits, which helps the kick cut through the mix instead of being masked by the bass.

To deal with masking effectively in your mixes, you can strategically manage the volume levels of individual instruments and make sure that instruments that share the same frequency range don’t play at the same time. This careful balancing ensures that no single instrument masks the others, maintaining the intended sonic balance.
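The sidechain idea mentioned above can be sketched in a few lines. This is a simplified ducker rather than a full compressor (there is no threshold or ratio), and the function names, the -9 dB depth and the attack and release times are assumptions chosen for illustration.

```python
import math

def envelope(samples, sample_rate, attack_ms=1.0, release_ms=100.0):
    """Simple envelope follower: fast rise, slow fall."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    level = 0.0
    out = []
    for s in samples:
        target = abs(s)
        coeff = attack if target > level else release
        level = coeff * level + (1.0 - coeff) * target
        out.append(level)
    return out

def duck(bass, kick, sample_rate, depth_db=-9.0):
    """Turn the bass down by up to depth_db while the kick envelope is high."""
    env = envelope(kick, sample_rate)
    out = []
    for b, e in zip(bass, env):
        gain_db = depth_db * min(e, 1.0)
        out.append(b * 10.0 ** (gain_db / 20.0))
    return out

# Hypothetical one-second example at a 1 kHz sample rate:
# the kick sounds for the first 100 ms, the bass holds a constant level.
sample_rate = 1000
kick = [1.0] * 100 + [0.0] * 900
bass = [1.0] * 1000
ducked = duck(bass, kick, sample_rate)
print(f"bass gain during kick: {ducked[99]:.2f}, after release: {ducked[-1]:.2f}")
```

While the kick is sounding, the bass is pulled down so it cannot mask the kick, and it recovers to full level once the kick has decayed.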

The missing fundamental

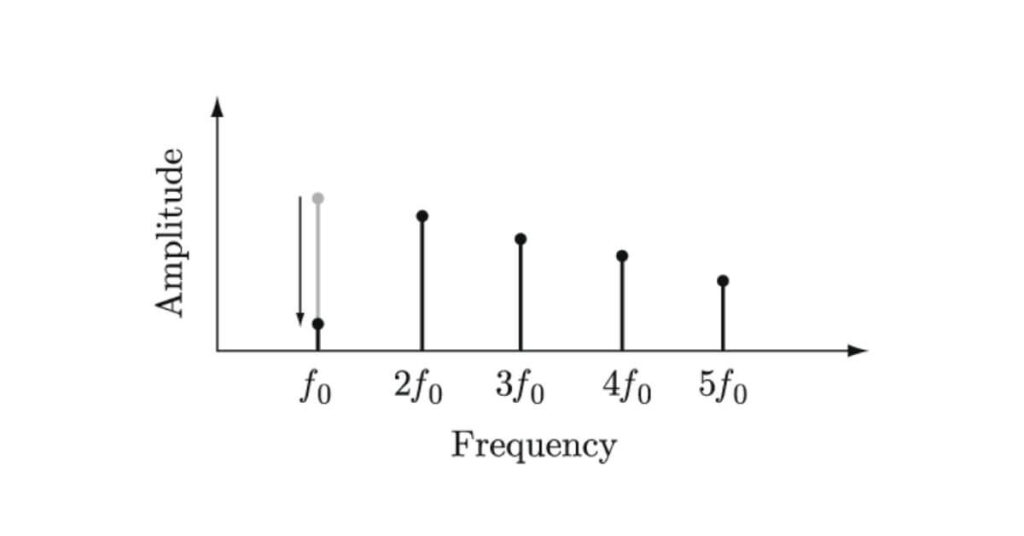

The “missing fundamental” phenomenon refers to our ability to perceive the pitch of a sound even when its lowest frequency, known as the fundamental, is absent. For instance, if a sound contains harmonics at 200, 400, and 600 Hz, but the 200 Hz fundamental is removed, we still perceive the pitch as 200 Hz. This perceived pitch is called the “residue pitch” or “virtual pitch,” and it arises because our brain processes the harmonics to fill in the gaps.

This phenomenon occurs because the brain can reconstruct low pitches using information from higher harmonics, allowing us to perceive pitches even in noisy environments where the fundamental frequency might be masked. Essentially, our auditory system compensates for the absence of the fundamental, leading to a rich and cohesive sound perception.

When it comes to production and mixing, grasping the concept of the missing fundamental can be extremely beneficial. For instance, while working with bass sounds or low-frequency instruments, you can achieve a sense of depth and presence without having to overly emphasise the fundamental frequency. Instead, you might focus on enhancing the harmonics through techniques like saturation, which can enrich the perception of the low end without excessive boosting. Keeping this phenomenon in mind also allows you to create mixes that translate well across various monitoring environments, such as phones or laptop speakers, where these harmonics can give the impression of a full low end, even if those speakers are unable to reproduce the actual low frequencies.
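You can see why the pitch survives by looking at how often the waveform repeats. The sketch below builds a signal from only the 400 Hz and 600 Hz harmonics (no 200 Hz component at all) and finds its repetition rate with a plain autocorrelation. The function name, the low 8 kHz sample rate and the 150 to 1000 Hz search range are assumptions for this illustration, and autocorrelation is only a loose analogy for what the auditory system does.

```python
import math

def repetition_rate_hz(samples, sample_rate, min_hz=150.0, max_hz=1000.0):
    """Find the strongest repetition rate in the signal via autocorrelation."""
    min_lag = int(sample_rate / max_hz)
    max_lag = int(sample_rate / min_hz)
    window = len(samples) - max_lag  # same number of terms for every lag
    best_lag = min_lag
    best_score = float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(window))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

sample_rate = 8000
# Harmonics of 200 Hz with the fundamental itself left out.
signal = [
    math.sin(2 * math.pi * 400 * i / sample_rate)
    + math.sin(2 * math.pi * 600 * i / sample_rate)
    for i in range(800)
]

pitch = repetition_rate_hz(signal, sample_rate)
print(f"waveform repeats {pitch:.0f} times per second")
```

Even though nothing in the signal vibrates at 200 Hz, the combined waveform repeats every 5 ms, which corresponds to the 200 Hz pitch we perceive.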